As part of my drive to get comfortable with Python, I decided to build a script to easily archive files to Amazon Glacier. Since I am cautious and perhaps a little paranoid, I wanted to make sure that the archives were encrypted. And since Glacier is cheap to send files to but expensive to restore from, I wanted to be able to bring back my archives in smaller chunks and to keep an inventory of what I have uploaded.

So my script allows you to do the following:

- Create Glacier Vaults

- Delete Glacier Vaults

- Request and view the inventory of a Glacier Vault

- Keep track of Glacier connection information

- Recursively archive a directory

- Capture data on all archived files (Modified Time, Owner of file, Size of file, Path to file, File type by extension) in a SQLite database

- Archive data to a .tar.gz until the .tar.gz reaches a certain size, then start a new .tar.gz, so that the downloads needed for restores stay small

- Encrypt each .tar.gz before uploading it to Glacier

- Upload the encrypted file to Glacier

- Track the vault name in the SQLite database

- TODO: Query database for file information

- TODO: Initiate restores based on database queries.
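The file-metadata capture in the list above could look roughly like the following. This is a minimal sketch, not the script's actual code: the `files` table, its columns, and the helper names `owner_of` and `catalog_directory` are hypothetical. File type is taken from the extension, as the list describes, and the owner falls back to the numeric uid where user names are unavailable.

```python
import os


def owner_of(st):
    """Return the owner's user name, or the numeric uid if it cannot be resolved.

    The pwd module only exists on Unix-like systems, and a uid may have no
    passwd entry (common in containers), so both cases fall back to the uid.
    """
    try:
        import pwd
        return pwd.getpwuid(st.st_uid).pw_name
    except (ImportError, KeyError):
        return str(st.st_uid)


def catalog_directory(conn, root):
    """Walk root recursively, record one row per file, and return the row count."""
    conn.execute(
        """CREATE TABLE IF NOT EXISTS files (
               path TEXT PRIMARY KEY,
               mtime REAL,
               owner TEXT,
               size INTEGER,
               extension TEXT)"""
    )
    count = 0
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            st = os.stat(path)
            # "File type" is approximated by the lower-cased extension.
            ext = os.path.splitext(name)[1].lower()
            conn.execute(
                "INSERT OR REPLACE INTO files VALUES (?, ?, ?, ?, ?)",
                (path, st.st_mtime, owner_of(st), st.st_size, ext),
            )
            count += 1
    conn.commit()
    return count
```

Keeping the path as the primary key means re-running the catalog over the same directory updates rows instead of duplicating them.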

Requirements

The script needs the following packages:

- beefish and pycrypto for encryption

- boto for AWS integration

- argparse (only needed on Python versions older than 2.7, where it is not part of the standard library)

You can install everything using pip:

pip install beefish pycrypto argparse boto

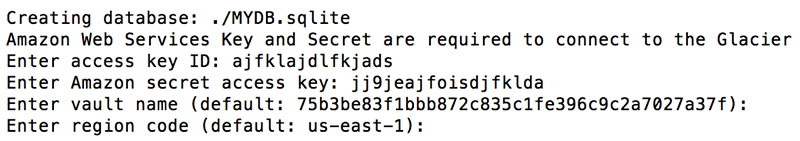

Database

The script storage-archive.py needs a SQLite database to do just about anything, so most commands will create one for you in the directory you run the command from, unless you specify a database with the -db option. When the database is first set up, the script asks for your Amazon Glacier credentials as well as the information it will later use to connect to your AWS account. It can also store multiple accounts/locations.

To create a database from the command line without running any other command, use:

storage-archive.py --initdb

To create the database at a specific path, add the -db option:

storage-archive.py --initdb -db /path/to/MYDB.sqlite
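The database behaviour described above (default to the current directory unless a path is given, and keep several accounts/locations side by side) can be sketched like this. It is an illustration under assumptions: the default file name `storage-archive.sqlite`, the `glacier_config` table, and the helpers `open_db`, `add_config`, and `list_configs` are all hypothetical, not the script's real names.

```python
import os
import sqlite3

# Hypothetical default file name; the real script's default is not documented here.
DEFAULT_DB_NAME = "storage-archive.sqlite"


def open_db(db_path=None):
    """Open (creating if needed) the database, defaulting to the current directory."""
    if db_path is None:
        db_path = os.path.join(os.getcwd(), DEFAULT_DB_NAME)
    conn = sqlite3.connect(db_path)
    # One row per account/location so several configurations can coexist.
    conn.execute(
        """CREATE TABLE IF NOT EXISTS glacier_config (
               id INTEGER PRIMARY KEY AUTOINCREMENT,
               label TEXT NOT NULL,
               region TEXT NOT NULL,
               access_key TEXT NOT NULL,
               secret_key TEXT NOT NULL)"""
    )
    conn.commit()
    return conn


def add_config(conn, label, region, access_key, secret_key):
    """Store one set of connection details and return its id."""
    cur = conn.execute(
        "INSERT INTO glacier_config (label, region, access_key, secret_key) "
        "VALUES (?, ?, ?, ?)",
        (label, region, access_key, secret_key),
    )
    conn.commit()
    return cur.lastrowid


def list_configs(conn):
    """Return (id, label, region) for every stored configuration, oldest first."""
    return conn.execute(
        "SELECT id, label, region FROM glacier_config ORDER BY id"
    ).fetchall()
```

This sketch keeps the keys in plain text, so the database file itself deserves the same care as the credentials.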

Starting an archive operation

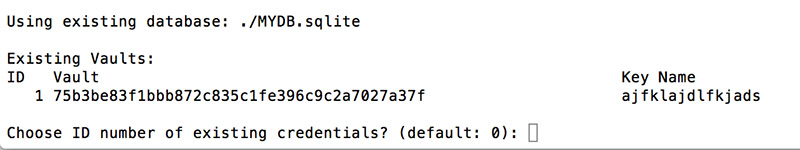

To archive data to Amazon Glacier, use the -a/--archive switch. This command creates a new database if you don't already have one or if you don't specify the path to your existing database.

storage-archive.py -db /path/to/MYDB.sqlite -a /path/to/directory

Since I created my database earlier using --initdb, I am not prompted for my Amazon credentials. Instead, I am asked which set of credentials I would like to use. To use a different set of credentials or a different location, I can press 0 to add a new configuration.
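The credential menu described above boils down to mapping a number to a stored configuration, with 0 reserved for "add a new one". A minimal sketch, assuming configurations are held as a list of `(id, label, region)` tuples; `select_config` and `prompt_for_config` are hypothetical names.

```python
def select_config(configs, choice):
    """Map a menu choice to a stored configuration.

    configs is a list of (id, label, region) tuples. Choice 0 means
    "add a new configuration" and is signalled by returning None.
    """
    if choice == 0:
        return None
    if not 1 <= choice <= len(configs):
        raise ValueError("choice must be between 0 and %d" % len(configs))
    return configs[choice - 1]


def prompt_for_config(configs, read=input):
    """Print a numbered menu and return the chosen configuration (or None)."""
    for number, config in enumerate(configs, start=1):
        print("%d) %s" % (number, config[1]))
    print("0) Add a new configuration")
    return select_config(configs, int(read("Select a configuration: ")))
```

Passing `read` as a parameter keeps the menu logic testable without a terminal.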

Once I have chosen a configuration, the archive operation kicks off and the script walks through every file under the path I specified. Information on each archived file is stored in a table in the database, and the files are added to a .tar.gz. When the .tar.gz reaches a certain size (500MB by default), it is encrypted and uploaded to Amazon Glacier. The decryption password and the Amazon vault ID are also stored in the database during this step.
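The size-capped .tar.gz rollover described above can be sketched with the standard `tarfile` module. This is an illustration, not the script's code: `build_archives` and the `archive-NNNN.tar.gz` naming are hypothetical, and it measures the running total of *uncompressed* bytes rather than the compressed file size. That is a deliberate simplification, since gzip buffers its output and the true compressed size is only reliable once the archive is closed.

```python
import os
import tarfile


def build_archives(paths, root, out_dir, max_bytes, prefix="archive"):
    """Pack files into numbered .tar.gz archives in out_dir.

    A new archive is started when adding the next file would push the running
    total of uncompressed bytes past max_bytes. A file larger than max_bytes
    still gets an archive of its own. Returns the list of archive paths.
    """
    archives = []
    tar = None
    current = 0
    try:
        for path in paths:
            size = os.path.getsize(path)
            if tar is None or (current > 0 and current + size > max_bytes):
                if tar is not None:
                    # The finished archive is where encryption and upload would happen.
                    tar.close()
                name = os.path.join(out_dir, "%s-%04d.tar.gz" % (prefix, len(archives)))
                tar = tarfile.open(name, "w:gz")
                archives.append(name)
                current = 0
            # Store paths relative to the archived root rather than absolute paths.
            tar.add(path, arcname=os.path.relpath(path, root))
            current += size
    finally:
        if tar is not None:
            tar.close()  # TarFile.close() is a no-op on an already closed archive
    return archives
```

For example, five 100-byte files with `max_bytes=250` end up split two, two, and one across three archives.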

To change the default maximum size of an archive, use the -sz/--asize option. In the following example, I changed the size to 100MB.

storage-archive.py -db /path/to/MYDB.sqlite -sz 100 -a /path/to/directory
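The options used so far could be declared with `argparse` roughly as follows. This is a sketch of the documented flags only, not the script's real parser: `build_parser` and `max_archive_bytes` are hypothetical, and treating 1MB as 1024 * 1024 bytes is an assumption, since the post does not say which definition the script uses.

```python
import argparse


def build_parser():
    """Sketch of the archive-related options described in this post."""
    parser = argparse.ArgumentParser(prog="storage-archive.py")
    # A single-dash, multi-letter option; argparse derives dest="db" from it.
    parser.add_argument("-db", help="path to the SQLite database")
    parser.add_argument("--initdb", action="store_true",
                        help="create the database and exit")
    parser.add_argument("-a", "--archive", metavar="DIRECTORY",
                        help="directory to archive recursively")
    parser.add_argument("-sz", "--asize", type=int, default=500,
                        help="maximum archive size in MB (default: 500)")
    return parser


def max_archive_bytes(args):
    # Assumes 1 MB = 1024 * 1024 bytes.
    return args.asize * 1024 * 1024
```

Parsing `["-db", "/path/to/MYDB.sqlite", "-sz", "100", "-a", "/path/to/directory"]` gives `args.asize == 100`, which this sketch turns into a 104857600-byte limit.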

To change the number of bytes used when generating the encryption password, use -ep/--encpass. The default is 15.

storage-archive.py -db /path/to/MYDB.sqlite -ep 12 -a /path/to/directory
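Generating a password from a configurable number of random bytes, and keeping it next to the archive it protects, might look like this. It is a hedged sketch: the post does not say how the script turns bytes into a password, so URL-safe base64 via `secrets.token_urlsafe` is an assumed encoding, and `generate_password`, `record_archive`, and the `archives` table are hypothetical. The actual encryption, which the post does with beefish, is not reproduced here.

```python
import secrets


def generate_password(nbytes=15):
    """Return a password built from nbytes of cryptographically secure randomness.

    URL-safe base64 is an assumed encoding; 15 bytes yield a 20-character string.
    """
    return secrets.token_urlsafe(nbytes)


def record_archive(conn, archive_name, vault_name, password):
    """Store the decryption password next to the archive and vault it belongs to."""
    conn.execute(
        """CREATE TABLE IF NOT EXISTS archives (
               archive_name TEXT PRIMARY KEY,
               vault_name TEXT NOT NULL,
               password TEXT NOT NULL)"""
    )
    conn.execute(
        "INSERT OR REPLACE INTO archives VALUES (?, ?, ?)",
        (archive_name, vault_name, password),
    )
    conn.commit()
```

Generating a fresh password per archive means losing one password only affects one archive.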

Vault Management

Vaults can be created or deleted with the following commands. As with the other commands, a database is required, so one will be created for you if you don't specify one.

Create a vault using the database

storage-archive.py -vc

Delete a vault using the database

storage-archive.py -vd

Submit an inventory of your vault to Amazon Glacier

storage-archive.py -vi

View the results of your inventory job. The results take hours to come back from Glacier after the job has been submitted.

storage-archive.py -vl
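Because an inventory is a two-step process (submit a job, then come back hours later for the output), the job ID has to be remembered between runs. The sketch below shows one way to do that. Note the swap: the post's script uses boto, while this sketch calls methods of boto3's Glacier client (`initiate_job`, `describe_job`, `get_job_output`). The client is passed in so it can be replaced, and the `inventory_jobs` table and function names are hypothetical.

```python
def _ensure_jobs_table(conn):
    conn.execute(
        "CREATE TABLE IF NOT EXISTS inventory_jobs "
        "(vault_name TEXT NOT NULL, job_id TEXT NOT NULL)"
    )


def submit_inventory(client, conn, vault_name):
    """Start an inventory-retrieval job (roughly the -vi step) and remember its ID."""
    response = client.initiate_job(
        accountId="-",  # "-" means the account that owns the credentials
        vaultName=vault_name,
        jobParameters={"Type": "inventory-retrieval"},
    )
    _ensure_jobs_table(conn)
    conn.execute("INSERT INTO inventory_jobs VALUES (?, ?)", (vault_name, response["jobId"]))
    conn.commit()
    return response["jobId"]


def fetch_inventory(client, conn, vault_name):
    """Return the newest inventory output (JSON bytes), or None if not ready yet."""
    _ensure_jobs_table(conn)
    row = conn.execute(
        "SELECT job_id FROM inventory_jobs WHERE vault_name = ? "
        "ORDER BY rowid DESC LIMIT 1",
        (vault_name,),
    ).fetchone()
    if row is None:
        return None  # no inventory job has been submitted for this vault
    job = client.describe_job(accountId="-", vaultName=vault_name, jobId=row[0])
    if not job["Completed"]:
        return None  # Glacier is still preparing the inventory
    output = client.get_job_output(accountId="-", vaultName=vault_name, jobId=row[0])
    return output["body"].read()
```

With boto3 installed, `client` would typically come from `boto3.client("glacier", region_name=...)`, and the returned bytes can be parsed with `json.loads`.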

Download

storage-archive.py can be downloaded from GitHub: